In Part 1 of the Ontology series, we discussed how unstructured data can be structured and transformed into meaningful data assets. In Part 2, we explained why ontology is the core structure that elevates LLM-based AI and AI Agents to a practical, production-ready level.

This third and final part focuses on the last step. It covers how data whose meaning is clearly defined through an ontology can be connected to real business execution, and how this can be implemented within a single platform.

Solving problems in real-world operations requires multiple steps between data and action.

Data is scattered across different databases, APIs, and files. It must be organized and structured before analysis, decision making, and execution become possible.

CommerceOS (COS) connects this entire chain within a single platform.

As an end-to-end problem-solving platform built on ontology and AI Agents, COS aims to connect data ingestion seamlessly to execution.

How COS connects data to action

The structure of COS is simple.

It connects data, assigns meaning, enables agents to work, turns results into views, and ultimately carries them through to real execution.

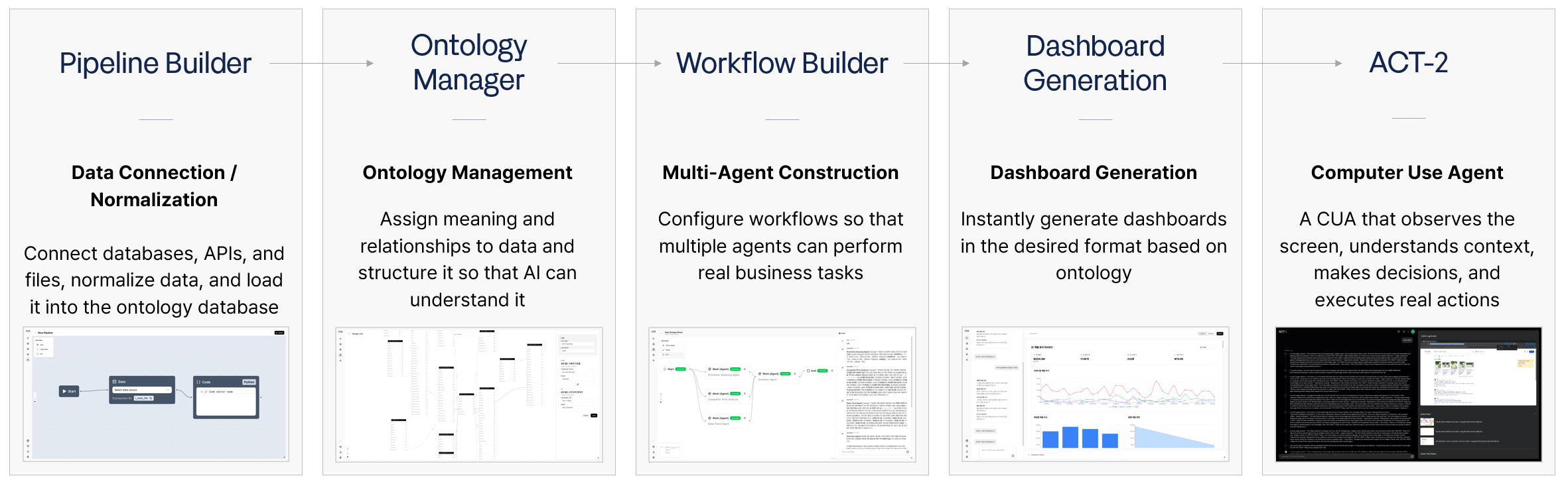

Below is a written explanation of the five products shown in the image.

1. Pipeline Builder, the data connection and normalization layer

Pipeline Builder connects customer databases, APIs, and files, ingests the data, and normalizes it so that the next layer can use it immediately.

The key point is that it does more than ETL on structured data.

- Structured data is organized through ETL and normalization

- Unstructured data is structured through text, image, and table recognition

The resulting data then flows in two directions.

- Loaded into ontology objects

- Stored separately as knowledge base data

In other words, Pipeline Builder is not simply a data ingestion function, but a preprocessing and structuring layer that transforms data into a form AI can work with.
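To make the two-way flow above concrete, here is a minimal Python sketch under stated assumptions. `RawRecord`, `run_pipeline`, and the other names are hypothetical and are not Pipeline Builder's API. The "key: value" parser is only a stand-in for real text, image, and table recognition. Keeping the raw text as knowledge base material is also an assumption made for illustration.

```python
from dataclasses import dataclass, field
from typing import Any


# Hypothetical record shape for illustration only; not COS's actual format.
@dataclass
class RawRecord:
    source: str       # e.g. "db", "api", "file"
    structured: bool  # True for rows that can go through ETL
    payload: Any      # dict for structured rows, str for unstructured text


@dataclass
class PipelineOutput:
    ontology_objects: list = field(default_factory=list)
    knowledge_base: list = field(default_factory=list)


def normalize_key(key: str) -> str:
    # Shared key convention so both paths produce the same field names.
    return key.strip().lower().replace(" ", "_")


def normalize_row(row: dict) -> dict:
    # Structured path: normalize keys and trim string values.
    return {
        normalize_key(k): v.strip() if isinstance(v, str) else v
        for k, v in row.items()
    }


def extract_fields(text: str) -> dict:
    # Unstructured path: a stand-in for text/image/table recognition
    # that only parses "key: value" lines.
    fields = {}
    for line in text.splitlines():
        if ":" in line:
            key, value = line.split(":", 1)
            fields[normalize_key(key)] = value.strip()
    return fields


def run_pipeline(records: list) -> PipelineOutput:
    out = PipelineOutput()
    for rec in records:
        if rec.structured:
            out.ontology_objects.append(normalize_row(rec.payload))
        else:
            fields = extract_fields(rec.payload)
            if fields:
                out.ontology_objects.append(fields)
            # Assumption: the original text is also kept as knowledge base material.
            out.knowledge_base.append(rec.payload)
    return out


records = [
    RawRecord("db", True, {"Product ID": "P-1", " Price ": 1200}),
    RawRecord("file", False, "Product ID: P-1\nReturn Policy: 30 days"),
]
result = run_pipeline(records)
print(result.ontology_objects)
# → [{'product_id': 'P-1', 'price': 1200}, {'product_id': 'P-1', 'return_policy': '30 days'}]
```

The design choice to illustrate here is the shared key convention. Because both paths normalize field names the same way, a structured row and a recognized document describing the same product end up with matching fields before they reach the ontology.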

2. Ontology Manager, the meaning and relationship design layer

Ontology Manager assigns meaning and relationships to data so that AI can understand it in a stable and consistent way.

Rather than explaining ontology in abstract terms, it is more precise to define it this way:

- Ontology Manager is a design tool that “translates data into a language AI can understand.”

As shown in the image, Ontology Manager includes the following.

- Object lists that organize data into business level units, with LLMs optionally generating initial semantic drafts

- Relationship link management that defines relationships between objects and establishes the basis for navigation, querying, and reasoning

- Knowledge base management for ground truth, guidelines, and rules

- Test cases and data validation to verify stability in real usage scenarios

Up to this point, COS focuses not on simply producing correct answers, but on establishing criteria that keep answers from becoming unstable. This foundation is essential for agentic AI systems, where autonomous agents must reason and act consistently over time.
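The sketch below shows how objects, relationship links, data validation, and test cases can fit together. It is minimal and written in Python. The classes and functions (`OntologyObject`, `Ontology`, `failing_test_cases`) are hypothetical, not Ontology Manager's actual data model. It assumes that validation means "required properties exist and link endpoints exist" and that a test case is an expected navigation result.

```python
from dataclasses import dataclass, field


# Hypothetical structures for illustration; not Ontology Manager's real data model.
@dataclass
class OntologyObject:
    object_id: str
    object_type: str   # business-level unit, e.g. "Product" or "Order"
    properties: dict


@dataclass
class Ontology:
    objects: dict = field(default_factory=dict)
    links: list = field(default_factory=list)     # (source_id, relation, target_id)
    required: dict = field(default_factory=dict)  # object_type -> required property names

    def add(self, obj: OntologyObject) -> None:
        self.objects[obj.object_id] = obj

    def link(self, source_id: str, relation: str, target_id: str) -> None:
        self.links.append((source_id, relation, target_id))

    def related(self, object_id: str, relation: str) -> list:
        # Navigation: follow one named relationship outward from an object.
        return [
            self.objects[t]
            for s, r, t in self.links
            if s == object_id and r == relation and t in self.objects
        ]

    def validate(self) -> list:
        # Data validation: required properties are present and link endpoints exist.
        errors = []
        for obj in self.objects.values():
            missing = self.required.get(obj.object_type, set()) - set(obj.properties)
            if missing:
                errors.append(f"{obj.object_id}: missing {sorted(missing)}")
        for s, r, t in self.links:
            for end in (s, t):
                if end not in self.objects:
                    errors.append(f"link {s} -{r}-> {t}: unknown object {end}")
        return errors


def failing_test_cases(onto: Ontology, cases: list) -> list:
    # Each case is (object_id, relation, expected related ids); return the failures.
    return [
        case for case in cases
        if sorted(o.object_id for o in onto.related(case[0], case[1])) != sorted(case[2])
    ]


onto = Ontology(required={"Product": {"name", "price"}})
onto.add(OntologyObject("P-1", "Product", {"name": "Mug", "price": 12}))
onto.add(OntologyObject("O-9", "Order", {"qty": 2}))
onto.link("O-9", "contains", "P-1")
print(onto.validate())                                           # → []
print(failing_test_cases(onto, [("O-9", "contains", ["P-1"])]))  # → []
```

Running validation and test cases after every change is one simple way to keep answers from drifting. A product missing its price, or an order linked to an unknown object, is caught before an agent ever reasons over it.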

3. Workflow Builder, the multi-agent orchestration layer

Workflow Builder is the execution layer that constructs workflows so that multiple agents can perform real business tasks.

What matters is not how many node types exist, but how safely the workflow orchestrates what agents see and which tools they use to execute actions.

As shown in the image, the key components are as follows.

- Ontology object connections that clearly define which data is used as evidence

- Knowledge base connections that incorporate operational standards and rules

- MCP and tool connections that link external system queries and executions for real task performance

- Conditional handling that safely manages exception flows through human-in-the-loop (HITL) review and conditional logic

In summary, Workflow Builder is not a tool that expands what multiple agents are capable of doing, but a tool that ensures they execute in ways that are acceptable in real-world operations.
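The following minimal Python sketch shows how these components combine into one guarded step, under stated assumptions. A pricing scenario, the `max_auto_change_pct` rule, and the names `pricing_agent` and `run_workflow` are all hypothetical, not Workflow Builder's node types. Here `execute_tool` stands in for an MCP or tool call, and `ask_human` stands in for an HITL approval step.

```python
from dataclasses import dataclass
from typing import Callable


# Hypothetical workflow pieces for illustration; not Workflow Builder's real node types.
@dataclass
class Proposal:
    sku: str
    change_pct: float
    evidence: list  # ontology object ids the agent used as evidence


def pricing_agent(product: dict) -> Proposal:
    # Stand-in agent: proposes matching the competitor price and cites the object it read.
    gap = (product["competitor_price"] - product["price"]) / product["price"] * 100
    return Proposal(product["object_id"], round(gap, 2), [product["object_id"]])


def run_workflow(
    product: dict,
    rules: dict,                               # knowledge base rule, e.g. max automatic change
    execute_tool: Callable[[Proposal], None],  # stand-in for an MCP/tool call
    ask_human: Callable[[Proposal], bool],     # HITL approval step
) -> str:
    proposal = pricing_agent(product)
    # Conditional handling: large changes or missing evidence are routed to a human.
    needs_review = abs(proposal.change_pct) > rules["max_auto_change_pct"] or not proposal.evidence
    if needs_review and not ask_human(proposal):
        return "rejected"
    execute_tool(proposal)
    return "executed_after_review" if needs_review else "executed"


executed = []
rules = {"max_auto_change_pct": 5.0}
small = {"object_id": "P-1", "price": 100.0, "competitor_price": 97.0}
large = {"object_id": "P-2", "price": 100.0, "competitor_price": 80.0}
print(run_workflow(small, rules, executed.append, lambda p: False))  # → executed
print(run_workflow(large, rules, executed.append, lambda p: False))  # → rejected
```

The point of the structure is that the agent's capability stays the same, while the rule from the knowledge base and the human checkpoint decide which of its proposals actually reach the tool.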

4. Dashboard Generation, the ontology based view and dashboard layer

Dashboard Generation enables the instant creation of dashboards based on ontology and natural language user queries.

The focus here is not one time generation, but ongoing operation.

- Dashboards generated based on ontology and natural language queries

- Generated views can be edited, deployed, and operated as reusable assets

Rather than stopping at dashboard creation, COS aims to accumulate dashboards as operational assets.

5. ACT-2, the Computer Use Agent based execution layer

ACT-2 is a Computer Use Agent (CUA) that observes screens, understands context, makes decisions, and executes real actions.

While most analytics tools stop at insight, COS includes execution as a core part of its product architecture.

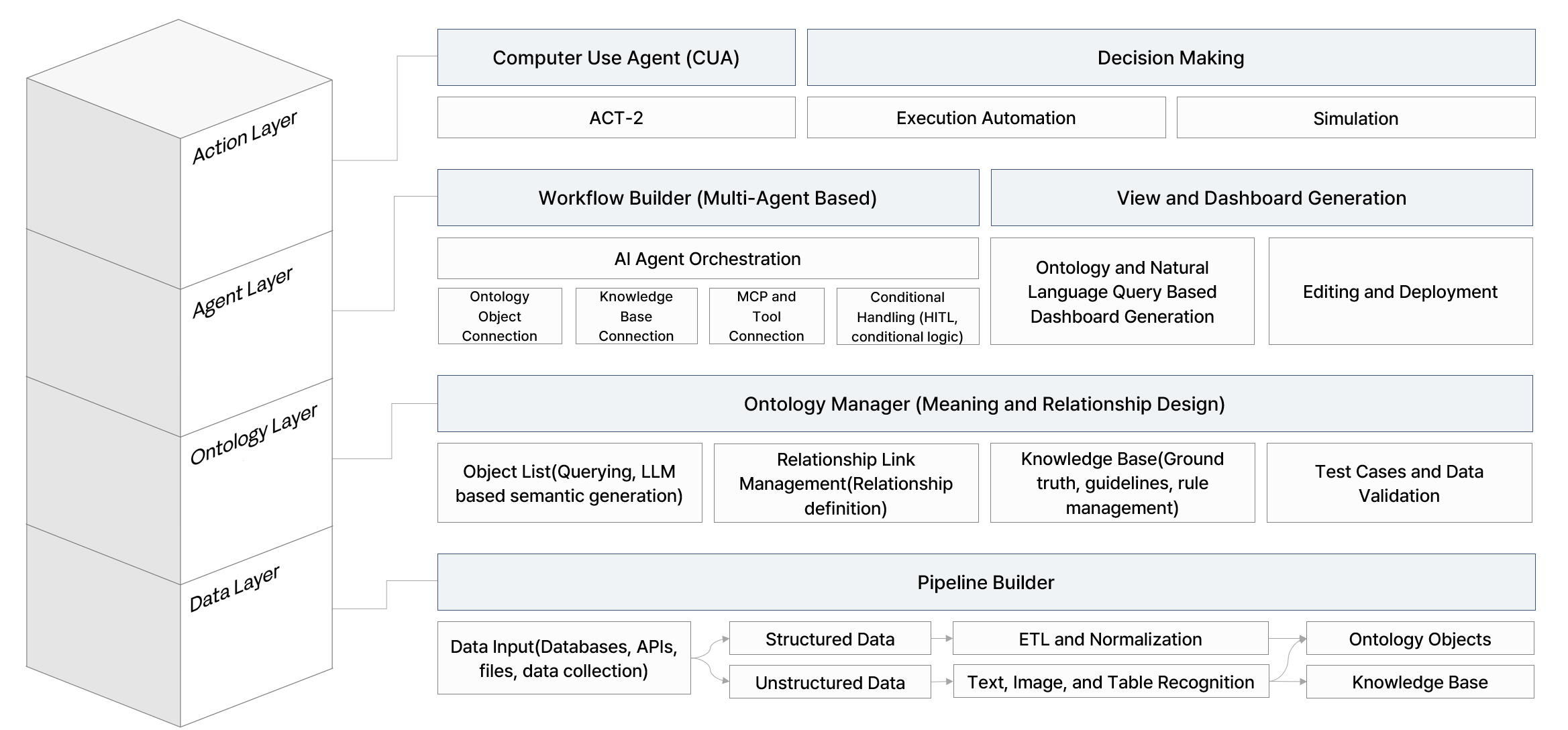

COS End-to-End Architecture Structure

When the five products above are reorganized from an architectural perspective, they can be summarized as follows.

- Data Layer
    - Data input from databases, APIs, files, and collection sources → ETL and normalization for structured data, plus text, image, and table recognition for unstructured data → loaded into ontology objects and the knowledge base

- Ontology Layer
    - Ontology Manager designs meaning and relationships, while the knowledge base, test cases, and data validation ensure reliability

- Agent Layer
    - Workflow Builder handles multi-agent orchestration, connects ontology, the knowledge base, MCP, and tools, and ensures stability through conditional handling including HITL

- Action Layer
    - ACT-2 (CUA) handles real execution and can be extended into higher-level decision-making functions such as execution automation and simulation

This structure allows COS to connect data ingestion to execution in a single flow, while each layer shares the same underlying assumptions around data structure, semantic criteria, and execution safeguards.

From ontology to execution, the final form of AI that COS aims for

The core message consistently conveyed throughout the ontology series is simple.

AI performance is determined not by the model alone, but by data, semantic structure, and execution design.

COS implements this structure within a single platform.

From connecting and organizing data,

to designing meaning and relationships,

to binding multiple agents into real operational processes,

to transforming dashboards into reusable operational assets,

and finally, to execution itself.

COS is less a product chasing the latest AI model than a platform designed to ensure AI executes safely and consistently in real-world operations.

This is why COS is introduced at the conclusion of this three part series.

Ontology is no longer a concept that ends at explanation. It is a structure that, when combined with AI Agents, can lead directly to real business execution. COS represents one concrete answer by implementing that structure end to end.

From connecting and organizing commerce and retail data, to designing meaning and relationships, to binding multiple agents into execution processes, to turning dashboards into operational assets, and finally to execution.

Solving all of these stages within a single COS platform represents Enhans’s vision for how digital labor should be automated.
