In Part 1 of this ontology series, we covered how unstructured data can be "structured" through an ontology.

In Part 2, we go one step further and explain why ontology becomes a core structure that elevates LLM-based AI into an execution-ready, production-level system.

1. The Era of LLMs and the Limitations That Surface in Practice

Since the emergence of ChatGPT, Claude, and Gemini, AI adoption has accelerated rapidly. Asking questions in natural language and receiving results is now the default in most work environments. The strengths of LLMs are clear. A single model can handle summarization, translation, Q&A, classification, and many other language tasks, and it can be applied immediately via an API without building a separate training pipeline.

The issues appear in production. LLMs generate highly plausible answers, but they do not guarantee that the output is factually correct, numerically consistent, or compliant with company rules. The representative failure mode here is hallucination.

In domains like commerce, healthcare, and law, where accurate numbers, reliable information, and consistent judgment are essential, this weakness directly constrains real-world deployment.

2. The Key to Moving Beyond LLM Limitations: Structuring Knowledge

For an LLM to function as an "agent" in real operations, it must go beyond language generation and be grounded in a team’s operational know-how and a high level of data reliability.

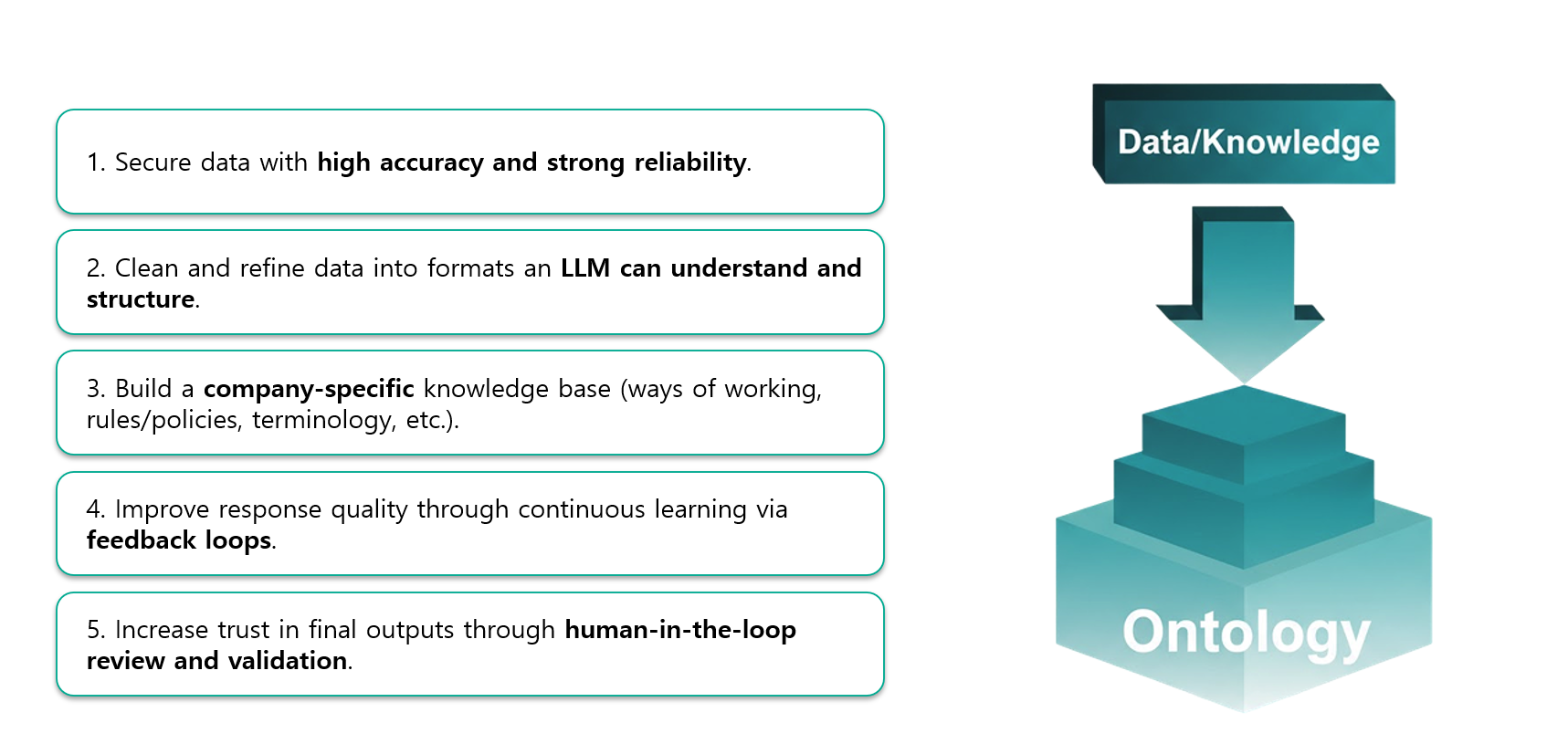

To make AI perform real work, the following elements are required:

- Securing highly accurate and trustworthy data.

- Refining data into a form that an LLM can understand and structure.

- Building a custom knowledge base that reflects each company’s operating style, rules, and terminology.

- Improving quality through a continuous feedback loop and human-in-the-loop verification.

The technology that satisfies these requirements and teaches AI the "context of the world" is ontology.

3. Ontology as a Layer That Elevates Data into a Knowledge Structure

The concept of ontology traces back to Aristotle’s metaphysics, the branch of philosophy that asks what exists and how it can be categorized. In the AI era, ontology extends that idea into data processing systems.

Technically, an ontology makes the following explicit:

- What "entities" exist

- What "properties" those entities have

- What "relationships" exist between entities

If databases and schemas are structures that "store values", ontology defines the meaning of values and how they connect. This difference matters because LLMs reason far more reliably when relationships and constraints are provided explicitly.

For example, if you ask why review ratings dropped, an LLM may produce a plausible explanation that is still hallucinated and disconnected from the true cause. But if an ontology connects the stages of "product, promotion, shipping, customer service, review, repurchase" and defines the properties and rules at each stage, the answer becomes an evidence-based insight that can be applied directly in real operations.
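As a rough illustration of the idea, the sketch below models entities, properties, and relationships in plain Python and walks the relationships outward from a review record to collect the connected facts. The class names (`Entity`, `Ontology`), the relation names, and all the values are hypothetical and chosen only for this example; this is not the Enhans or Palantir data model.

```python
from dataclasses import dataclass, field


@dataclass
class Entity:
    """A node in the ontology: a typed thing with named properties."""
    id: str
    type: str
    properties: dict = field(default_factory=dict)


class Ontology:
    """Hypothetical minimal store of entities and directed relationships."""

    def __init__(self):
        self.entities = {}
        self.relations = []  # (source_id, relation_name, target_id)

    def add(self, entity):
        self.entities[entity.id] = entity

    def relate(self, source_id, relation, target_id):
        self.relations.append((source_id, relation, target_id))

    def neighbors(self, entity_id):
        """Return (relation, entity) pairs directly linked from entity_id."""
        return [(rel, self.entities[dst])
                for src, rel, dst in self.relations if src == entity_id]

    def trace(self, start_id):
        """Follow outgoing relationships and collect every connected fact."""
        facts, stack, seen = [], [start_id], set()
        while stack:
            current = stack.pop()
            if current in seen:
                continue
            seen.add(current)
            for rel, target in self.neighbors(current):
                facts.append((current, rel, target.id, target.properties))
                stack.append(target.id)
        return facts


# Illustrative data only: a batch of reviews linked back to orders,
# shipping, and the promotion that drove the orders.
onto = Ontology()
onto.add(Entity("review-batch", "Review", {"avg_rating": 3.1}))
onto.add(Entity("order-batch", "Order", {"count": 1200}))
onto.add(Entity("shipment-batch", "Shipping", {"avg_delay_days": 4}))
onto.add(Entity("promo-1", "Promotion", {"discount_pct": 30}))
onto.relate("review-batch", "written_for", "order-batch")
onto.relate("order-batch", "fulfilled_by", "shipment-batch")
onto.relate("order-batch", "driven_by", "promo-1")

evidence = onto.trace("review-batch")
for src, rel, dst, props in evidence:
    print(f"{src} --{rel}--> {dst} {props}")
```

Facts collected this way could be passed to an LLM as explicit context, so that its explanation is tied to linked records rather than to a guess.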

4. Enhans Meets Palantir: A Hybrid Semantic Approach

Palantir, a global data company, uses ontology as a strategy to unify complex business processes. Enhans was selected for Palantir’s Startup Fellowship, where we learned Palantir’s ontology philosophy directly at headquarters and built solutions based on it.

Palantir’s core capability lies in a hybrid approach that integrates relational databases (RDB) and vector databases. This allows legacy modeling and knowledge graphs to be combined, creating a foundation for AI agents to perform intelligent semantic analysis.

Building on this philosophy, Enhans developed a proprietary end-to-end (E2E) ontology solution optimized for commerce environments.

5. The Practical Difference: Ontology vs RAG (Retrieval Augmented Generation)

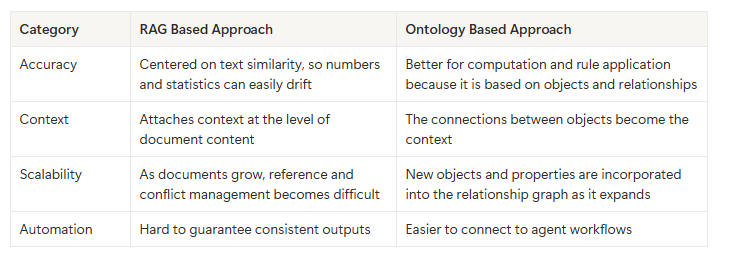

RAG (Retrieval-Augmented Generation), a popular approach in today’s AI landscape, has an LLM answer a question by first retrieving documents similar to it, typically from a vector database. However, because retrieval is based on similarity scores rather than exact lookup, RAG has clear limitations in commerce environments where exact numbers matter.

In practice, when we ask "Analyze this month’s revenue and review trends" through Enhans CommerceOS, RAG-based AI often returns only approximate figures. Ontology-based AI produces exact totals (down to the won), daily trends, and promotion impact, which supports deeper insights backed by precise numbers.
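To make the contrast concrete, here is a minimal sketch of why structured records give exact answers: figures are computed by aggregating typed fields, not by reading numbers out of retrieved text. The record layout, the `monthly_report` function, and the sample values are assumptions made for this example and do not describe how CommerceOS is implemented.

```python
from collections import defaultdict
from datetime import date

# Hypothetical order records; amounts are integer won so sums stay exact.
orders = [
    {"day": date(2024, 5, 1), "amount_krw": 129_000, "promotion": "spring-sale"},
    {"day": date(2024, 5, 1), "amount_krw": 58_500, "promotion": None},
    {"day": date(2024, 5, 2), "amount_krw": 240_900, "promotion": "spring-sale"},
    {"day": date(2024, 6, 1), "amount_krw": 75_000, "promotion": None},
]


def monthly_report(records, year, month):
    """Aggregate exact revenue for one month: total, per day, per promotion."""
    daily = defaultdict(int)
    by_promo = defaultdict(int)
    for r in records:
        if (r["day"].year, r["day"].month) != (year, month):
            continue  # skip orders outside the requested month
        daily[r["day"]] += r["amount_krw"]
        by_promo[r["promotion"] or "no-promotion"] += r["amount_krw"]
    return {
        "total_krw": sum(daily.values()),
        "daily_krw": dict(sorted(daily.items())),
        "by_promotion_krw": dict(by_promo),
    }


report = monthly_report(orders, 2024, 5)
print(report["total_krw"])          # 428400
print(report["by_promotion_krw"])   # {'spring-sale': 369900, 'no-promotion': 58500}
```

In a setup like this, the LLM's role would be to phrase and interpret the report, while the numbers themselves come from deterministic aggregation.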

6. The Four Components of the Enhans Ontology

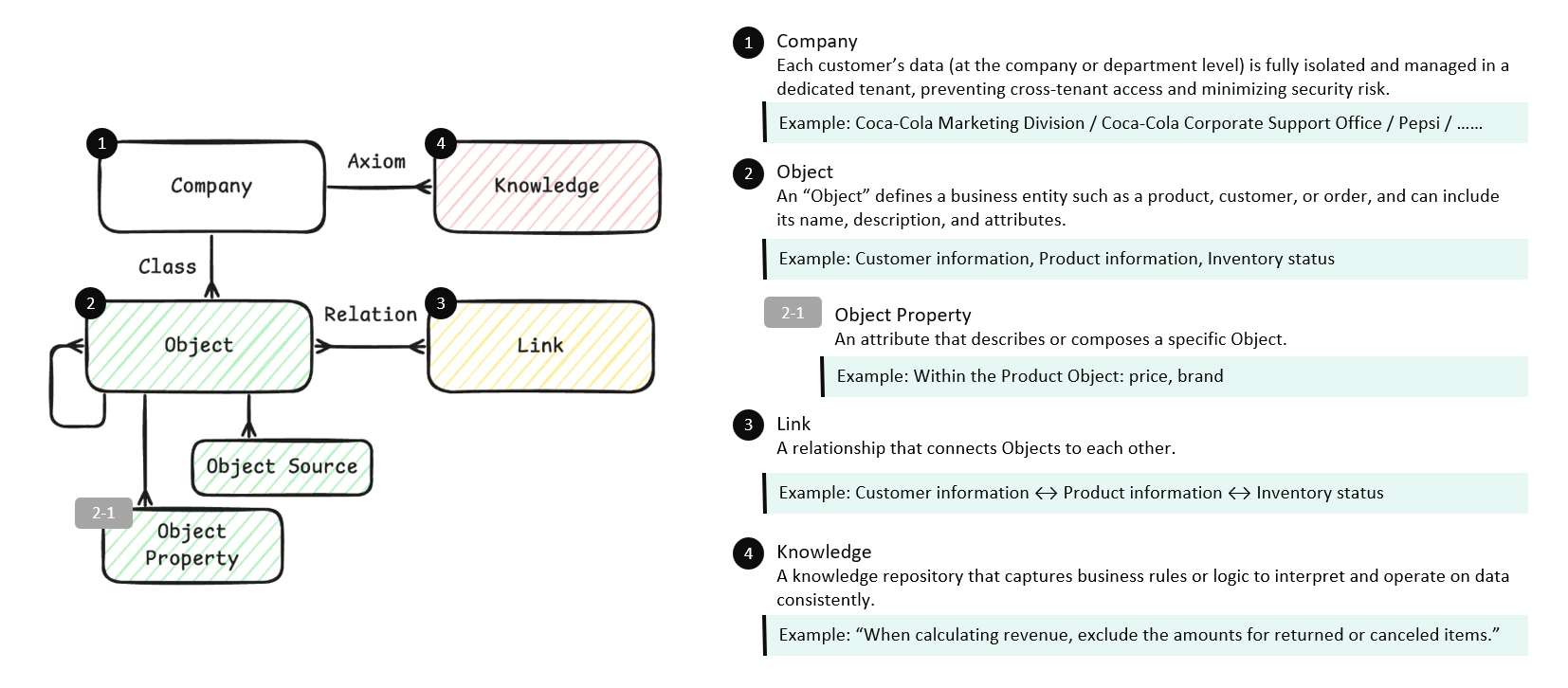

The Enhans ontology is built around four core concepts:

- Object: Defines target concepts such as products, customers, and orders, including name, description, and attributes.

- Property: Defines the specific characteristics and states of an object. It includes structured data such as price, quantity, category, creation date, and status values, as well as unstructured and derived properties such as semantic descriptions, summaries, and classification outputs generated by LLMs. This allows the same object to be interpreted and used at multiple levels depending on analytical goals and context.

- Link: Defines connections between objects to surface hidden context and meaning. For example, it can reveal derived relationships across "revenue, promotion, review".

- Knowledge: Captures business logic, constraints, and inference rules. Company-specific rules such as "Exclude canceled item amounts when calculating revenue" are included here.

In particular, Enhans manages customer data in complete isolation at the tenant level, which makes it possible to build company-specific knowledge graphs without compromising data security.
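The four components described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class names (`Obj`, `Link`, `Knowledge`), the `contains` relation, and the sample order are hypothetical, and the only element taken from the text is the rule "Exclude canceled item amounts when calculating revenue".

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Obj:
    """Object: a target concept; its Property values live in `properties`."""
    id: str
    type: str
    properties: dict


@dataclass
class Link:
    """Link: a named, directed connection between two objects."""
    source: str
    relation: str
    target: str


@dataclass
class Knowledge:
    """Knowledge: a business rule expressed as a predicate over objects."""
    name: str
    description: str
    include: Callable[[Obj], bool]


# Illustrative data: one order containing three items, one of them canceled.
objects = {o.id: o for o in [
    Obj("order-1", "Order", {"status": "completed"}),
    Obj("item-1", "OrderItem", {"amount_krw": 30_000, "status": "paid"}),
    Obj("item-2", "OrderItem", {"amount_krw": 12_000, "status": "canceled"}),
    Obj("item-3", "OrderItem", {"amount_krw": 8_000, "status": "paid"}),
]}
links = [
    Link("order-1", "contains", "item-1"),
    Link("order-1", "contains", "item-2"),
    Link("order-1", "contains", "item-3"),
]

revenue_rule = Knowledge(
    name="revenue_excludes_canceled",
    description="Exclude canceled item amounts when calculating revenue",
    include=lambda item: item.properties["status"] != "canceled",
)


def order_revenue(order_id, rule):
    """Follow 'contains' links from the order and sum items the rule allows."""
    items = [objects[l.target] for l in links
             if l.source == order_id and l.relation == "contains"]
    return sum(i.properties["amount_krw"] for i in items if rule.include(i))


revenue = order_revenue("order-1", revenue_rule)
print(revenue)  # 38000
```

Keeping the rule as a separate, named piece of data means the same revenue calculation can change from company to company without touching the objects or links.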

7. The Key to Deployable AI in Enterprise Environments Is the "Framework"

As LLM performance improves, the more important question becomes:

"What does this model reference, and what rules does it use to make decisions?"

The era of making decisions by looking at only fragments of massive data or relying on experience is over. Ontology ties scattered data into a single knowledge network, becoming an essential engine that enables AI to evolve from a simple tool into a core business partner.

Ontology is like an intricate map that carefully categorizes a messy library by topic, author, and content, and then connects related books with threads. In an ocean of knowledge where it is easy to get lost, it becomes a compass that helps AI find the most accurate and fastest path.

This post focused on structuring AI knowledge through ontology. Part 3 will show how "work gets executed" on top of that structure, using Enhans CommerceOS as a concrete case.
