How Do We Evaluate AI Models?

Evaluating the performance of AI models has long been a key challenge in both industry and academia. The most common method has been to give models exam-style questions and measure how many correct answers they produce. Well-known benchmarks such as MMLU and GSM8K have been widely used to evaluate knowledge and reasoning skills.

Yet this approach has clear limitations. What people expect from AI is not simply solving test problems, but demonstrating real-world task execution. Searching for information, navigating websites, clicking the right buttons, and completing a goal are all skills that static benchmarks cannot fully capture.

This is why web-based AI evaluation has emerged, with Mind2Web at the center.

What is Mind2Web?

Mind2Web is a globally recognized web-based AI Agent evaluation benchmark introduced by the NLP group at Ohio State University at NeurIPS 2023. As its name suggests, it measures how well an AI can think and act in real web environments.

The benchmark contains more than 2,000 tasks across 30 web domains. Rather than testing text-only problem solving, Mind2Web requires agents to perform realistic interactions such as clicking, navigating, entering data, and completing multi-step workflows. This makes it one of the most effective tools for evaluating whether an AI can operate as a true Agentic AI Agent in practice.

Mind2Web has already become a standard for AI Evaluation and AI Performance comparison. Research such as WEPO, Synatra, and Auto-Intent adopted Mind2Web as a benchmark for validating their models, while Mind2Web-2 expanded evaluations to include both commercial and closed-source systems. As a result, Mind2Web is now widely recognized as a global benchmark for AI Evaluation across academia and industry.

Online-Mind2Web: A Harder Evaluation Environment

Building on this foundation, Online-Mind2Web offers an even more challenging setting.

- Online-Mind2Web includes 300 tasks across 136 web domains.

- While the original Mind2Web used cached static webpages, Online-Mind2Web evaluates models on live websites.

- This introduces greater complexity with factors like cookies, pop-ups, and changing layouts, creating a significantly harder benchmark.

By moving beyond static environments, Online-Mind2Web enables evaluations that more closely reflect real-world conditions, making it an important standard for testing the true capabilities of AI Agents.

How Mind2Web Evaluates AI

What makes Mind2Web and Online-Mind2Web distinctive is that they evaluate not just the correctness of an answer, but the process an AI follows to achieve a goal. For instance, in a task like “book a hotel,” the model is not only expected to provide an answer. It must find the search bar, select dates, and click the booking button in the right sequence.

The core innovation lies in using real websites to define tasks. While older benchmarks relied on simplified simulated environments, Mind2Web reflects real domains such as e-commerce, travel, and information retrieval. This allows researchers to measure how useful an AI Agent would be in practice.

Another key feature is the Agent-as-a-Judge framework. Instead of requiring human annotators to score every attempt, another AI agent acts as the evaluator. This makes large-scale testing more efficient while maintaining strong alignment with human judgment.

Together, Mind2Web and Online-Mind2Web have become standards for AI Evaluation and AI Performance benchmarking and serve as bridges between Agentic AI research and real-world industrial applications.

The Performance of Enhans ACT-1

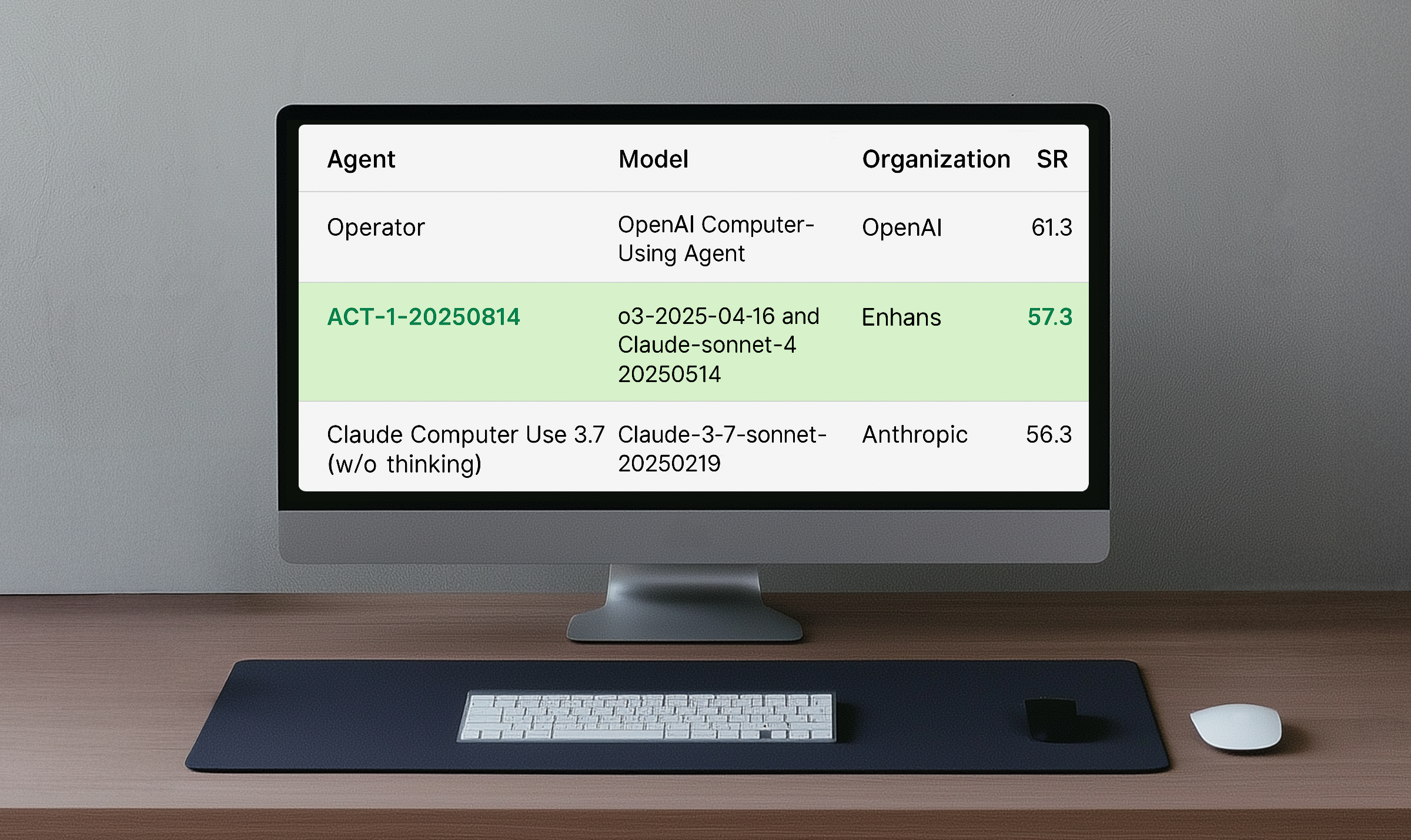

In the recent Online-Mind2Web evaluation, Enhans’ proprietary ACT-1 model ranked second overall. What makes this result especially meaningful is that ACT-1 was originally designed as a Commerce AI Agent, yet it excelled in a benchmark that covers a wide range of general-purpose tasks. This demonstrates that ACT-1 is not only strong within commerce but also competitive as a general Agentic AI model.

ACT-1 is also differentiated by its focus on reusability. Some AI Agents, such as OpenAI’s Operator or Anthropic’s Claude, rely on coordinate-based actions. This makes it difficult to record and reuse action sequences when page layouts shift or content changes. Enhans ACT-1 instead uses a DOM-based approach, which allows action data to be stored and reused. As a result, it adapts more reliably to layout changes and performs tasks more flexibly, much like a human navigating the web.

This achievement is more than just a ranking. Competing against the largest research-driven models, Enhans has demonstrated the real-world potential of AI Agents in a benchmark recognized by both academia and industry.

The Future of Commerce AI Agents Through Mind2Web

The move toward evaluating AI Performance in real-world web environments, rather than on static test problems, will only continue to grow. At the forefront of this trend, Mind2Web and Online-Mind2Web have already become global standards for AI Evaluation and are likely to remain the key benchmarks for measuring the true capabilities of Agentic AI.

In this context, the success of Enhans’ ACT-1 model is especially noteworthy. Despite being designed as a Commerce AI Agent, ACT-1 ranked second globally in a general-purpose benchmark and proved through its DOM-based approach that it can store, reuse, and adapt actions like a human. This shows that ACT-1 is not just a research project, but a Commerce AI Agent ready for real business environments.

Looking ahead, Enhans plans to expand beyond ACT-1 with specialized agents such as the Price Agent, QA Agent, and CS Agent. Each will be designed to create measurable business value while pushing forward the frontier of practical Agentic AI. The achievement on Online-Mind2Web is only the beginning.

Enhans’ ACT-1 model is moving into real business applications.

.png)

in solving your problems with Enhans!

We'll contact you shortly!